Hadoop is an open-source framework used to capture, manage, and process data on distributed systems; it runs on a cluster of nodes. One of the core components of Hadoop is HDFS (Hadoop Distributed File System). It lets a single Hadoop cluster scale to hundreds of nodes or more, and each added node contributes storage and data-processing capacity.

Now we're going to configure Hadoop on the target nodes using Ansible. We already used Ansible in my last blog, so instead of explaining the basics again, let's start with our Ansible playbook:

Ansible-playbook for configuring Master/Name node:

---
- hosts: localhost
  # Values substituted into the tasks and into the two XML templates
  vars:
    ip: 0.0.0.0
    port: 9459
    dir: masternode
    node: name
  tasks:
    - name: Downloading Hadoop
      command: wget https://archive.apache.org/dist/hadoop/core/hadoop-1.2.1/hadoop-1.2.1-1.x86_64.rpm

    - name: Installing Hadoop
      command: rpm -i hadoop-1.2.1-1.x86_64.rpm --force

    - name: Downloading Java
      command: wget http://35.244.242.82/yum/java/el7/x86_64/jdk-8u171-linux-x64.rpm

    - name: Installing Java
      command: rpm -i jdk-8u171-linux-x64.rpm

    - name: Creating Directory
      file:
        path: /{{ dir }}
        state: directory

    - name: Configuring hdfs-site.xml
      template:
        src: /root/Ansible/hdfs-site.xml
        dest: /etc/hadoop/hdfs-site.xml

    - name: Configuring core-site.xml
      template:
        src: /root/Ansible/core-site.xml
        dest: /etc/hadoop/core-site.xml

    - name: Formatting {{ node }}node
      command: hadoop {{ node }}node -format

    - name: Starting the {{ node }}node Server
      command: hadoop-daemon.sh start {{ node }}node

    # jps lists the running Java processes
    - name: Checking Status
      command: jps
      register: status

    - debug:
        var: status

    - name: Checking Report
      command: hadoop dfsadmin -report
      register: admin_report

    - debug:
        var: admin_report
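
The command tasks above run wget and rpm every time the playbook is executed, so a second run repeats the download and the install. Here is a minimal sketch of one way to make those steps idempotent. It assumes the RPM is saved under /root (a path chosen here for illustration) and that /etc/hadoop exists only after the package is installed. The guard on the format step also assumes that formatting writes current/VERSION inside the name directory.

```yaml
# Sketch only: possible replacements for the matching tasks in the playbook.
# /root/hadoop-1.2.1-1.x86_64.rpm is an assumed download location.
- name: Downloading Hadoop
  get_url:
    url: https://archive.apache.org/dist/hadoop/core/hadoop-1.2.1/hadoop-1.2.1-1.x86_64.rpm
    dest: /root/hadoop-1.2.1-1.x86_64.rpm   # not fetched again if this file exists

- name: Installing Hadoop
  command: rpm -i /root/hadoop-1.2.1-1.x86_64.rpm --force
  args:
    creates: /etc/hadoop   # assumption: present only after installation

- name: Formatting {{ node }}node
  command: hadoop {{ node }}node -format
  args:
    creates: /{{ dir }}/current/VERSION   # assumption: written by the format step
```

With creates set, Ansible skips the command when the named path already exists, so re-running the playbook should leave an already configured node untouched.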

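The playbook reads hdfs-site.xml and core-site.xml from the controller node as templates and fills in the variables defined above. For the master node, dfs.{{ node }}.dir becomes dfs.name.dir with the value /masternode, and fs.default.name becomes hdfs://0.0.0.0:9459. The two templates are: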

hdfs-site.xml:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>dfs.{{ node }}.dir</name>
    <value>/{{ dir }}</value>
  </property>
</configuration>

core-site.xml:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://{{ ip }}:{{ port }}</value>
  </property>
</configuration>

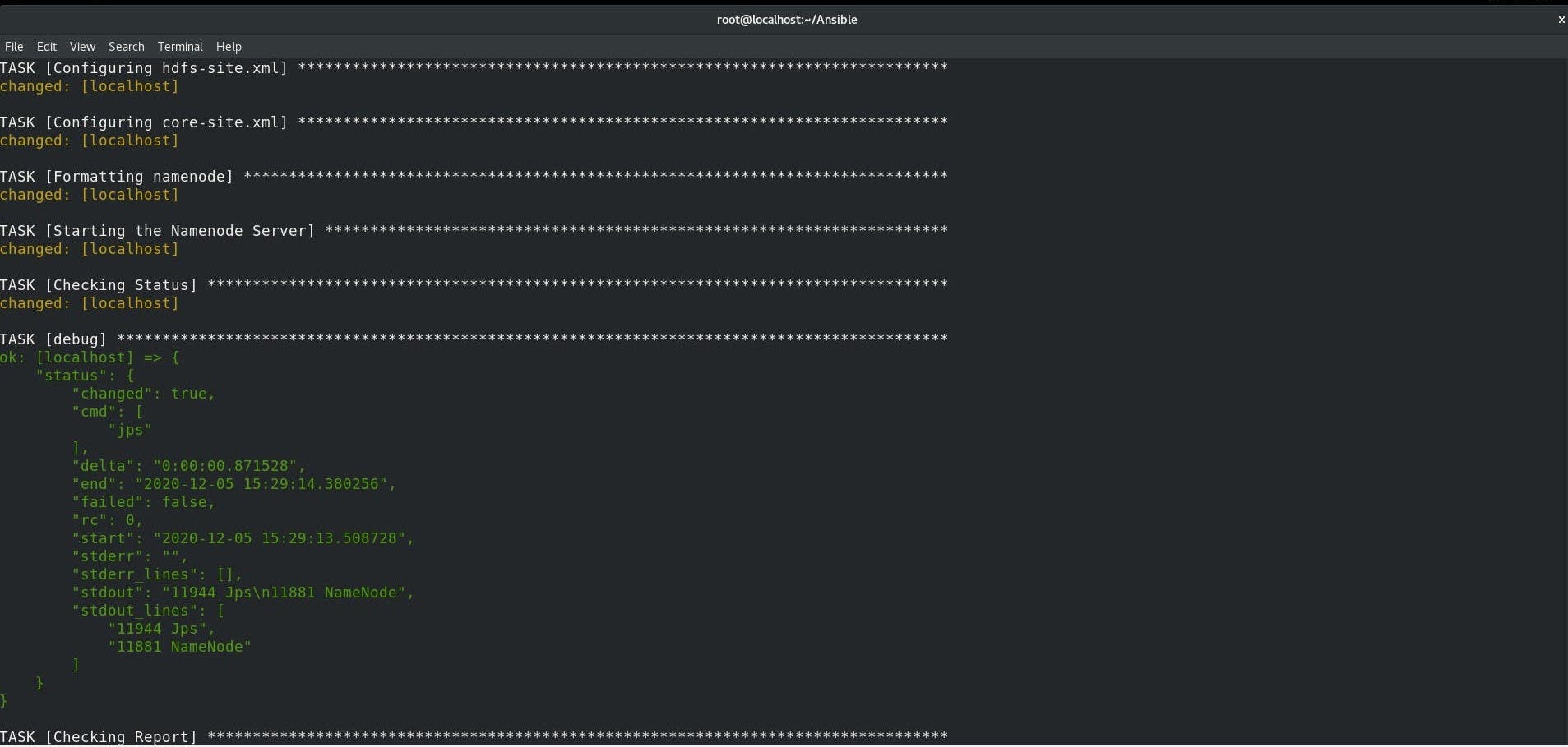

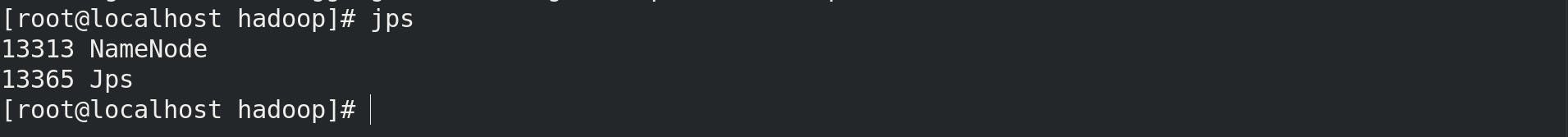

We can see that the master node is configured successfully. The Checking Status and Checking Report tasks at the end of the playbook show whether the Hadoop services are running.
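
The Checking Status task only prints the jps output, so a missing service has to be spotted by eye. As a sketch, the check could be made to fail the play when the NameNode process is absent. This uses Ansible's assert module and assumes jps prints each process as a PID followed by a space and the class name.

```yaml
# Sketch: fail the play if jps does not list a NameNode process.
- name: Checking Status
  command: jps
  register: status
  changed_when: false   # a read-only check should not report a change

- name: Verifying that the NameNode is running
  assert:
    that:
      # the leading space keeps "SecondaryNameNode" from matching
      - "' NameNode' in status.stdout"
    fail_msg: NameNode is not listed in the jps output
```

The same pattern should work on the data node by testing for ' DataNode' instead.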

Ansible-playbook for configuring Slave/Data node:

---
- hosts: localhost
  # ip is the address of the name node that this data node connects to
  vars:
    ip: 13.126.156.47
    port: 9459
    dir: slavenode
    node: data
  tasks:
    - name: Downloading Hadoop
      command: wget https://archive.apache.org/dist/hadoop/core/hadoop-1.2.1/hadoop-1.2.1-1.x86_64.rpm

    - name: Installing Hadoop
      command: rpm -i hadoop-1.2.1-1.x86_64.rpm --force

    - name: Downloading Java
      command: wget http://35.244.242.82/yum/java/el7/x86_64/jdk-8u171-linux-x64.rpm

    - name: Installing Java
      command: rpm -i jdk-8u171-linux-x64.rpm

    - name: Creating Directory
      file:
        path: /{{ dir }}
        state: directory

    - name: Configuring hdfs-site.xml
      template:
        src: /root/Ansible/hdfs-site.xml
        dest: /etc/hadoop/hdfs-site.xml

    - name: Configuring core-site.xml
      template:
        src: /root/Ansible/core-site.xml
        dest: /etc/hadoop/core-site.xml

    - name: Starting the {{ node }}node Server
      command: hadoop-daemon.sh start {{ node }}node

    # jps lists the running Java processes
    - name: Checking Status
      command: jps
      register: status

    - debug:
        var: status
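
The two playbooks differ only in their vars and in the format step, which only the name node needs. As a sketch of how they might be merged, the format task could live in one shared playbook and be guarded by a condition on node. This is an assumption about a possible refactoring, not the setup used in this post, and the file name hadoop.yml below is hypothetical.

```yaml
# Sketch: the format task runs only when the play configures a name node.
- name: Formatting {{ node }}node
  command: hadoop {{ node }}node -format
  when: node == "name"
```

Such a playbook could then be run for a data node with ansible-playbook hadoop.yml -e "node=data dir=slavenode ip=13.126.156.47", since extra vars take precedence over the vars defined in the play.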

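Again, the playbook uses the same hdfs-site.xml and core-site.xml templates from the controller node. This time node is data and dir is slavenode, so the property becomes dfs.data.dir with the value /slavenode, and fs.default.name points to the name node at hdfs://13.126.156.47:9459: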

hdfs-site.xml:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>dfs.{{ node }}.dir</name>
    <value>/{{ dir }}</value>
  </property>
</configuration>

core-site.xml:

<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://{{ ip }}:{{ port }}</value>
  </property>
</configuration>

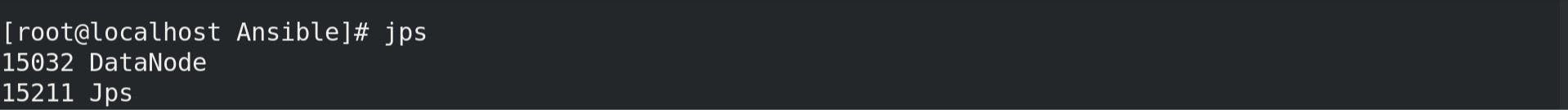

After this, the slave node is configured successfully. The Checking Status task at the end of the playbook shows whether the Hadoop service is running.

We can see that Master-node and Slave-node are successfully configured. Special thanks to Mr. Vimal Daga sir for the knowledge.